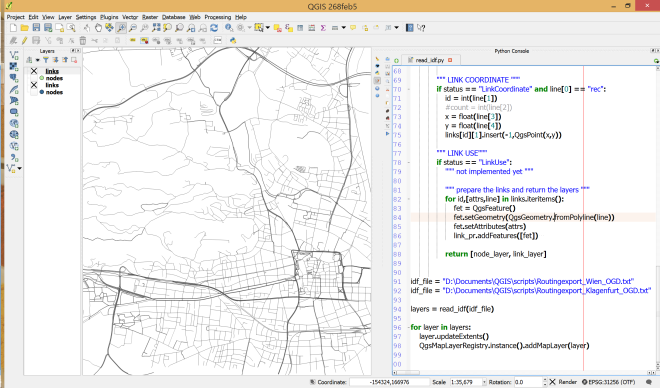

In the previous post, we investigated how to bring QGIS maps into Jupyter notebooks.

Today, we’ll take the next step and add basemaps to our maps. This is trickier than I would have expected. In particular, I was fighting with “invalid” OSM tile layers until I realized that my QGIS application instance somehow lacked the “WMS” provider.

In addition, getting basemaps to work also means that we have to take care of layer and project CRSes and on-the-fly reprojections. So let’s get to work:

```python
from IPython.display import Image
from PyQt5.QtGui import QColor
from PyQt5.QtWidgets import QApplication
from qgis.core import (
    QgsApplication, QgsVectorLayer, QgsProject, QgsRasterLayer,
    QgsCoordinateReferenceSystem, QgsProviderRegistry, QgsSimpleMarkerSymbolLayerBase
)
from qgis.gui import QgsMapCanvas

app = QApplication([])
qgs = QgsApplication([], False)
qgs.setPrefixPath(r"C:\temp", True)  # setting a prefix path should enable the WMS provider
qgs.initQgis()

canvas = QgsMapCanvas()
project = QgsProject.instance()

map_crs = QgsCoordinateReferenceSystem('EPSG:3857')
canvas.setDestinationCrs(map_crs)

print("providers: ", QgsProviderRegistry.instance().providerList())
```
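Since a missing provider only shows up later as an "invalid" layer, it can help to check the provider list right away. Here is a minimal sketch of such a check. Note that `missing_providers` is a hypothetical helper (it is not part of QGIS) and the example list is made up; in the notebook, the list would come from `QgsProviderRegistry.instance().providerList()`.

```python
def missing_providers(available, required=("wms",)):
    """Return the required provider keys that do not appear in `available`."""
    available_keys = {key.lower() for key in available}
    return [key for key in required if key.lower() not in available_keys]


# Made-up provider list for illustration only (assumption, not real QGIS output).
example_providers = ["gdal", "ogr", "memory"]

missing = missing_providers(example_providers)
if missing:
    print("missing providers:", missing)
else:
    print("all required providers are available")
```

An empty result means that all required providers are registered and the basemap layer has a chance of being valid.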

To add an OSM basemap, we use the XYZ tiles option of the WMS provider:

```python
urlWithParams = 'type=xyz&url=https://tile.openstreetmap.org/{z}/{x}/{y}.png&zmax=19&zmin=0&crs=EPSG3857'
rlayer = QgsRasterLayer(urlWithParams, 'OpenStreetMap', 'wms')
print(rlayer.crs())

if rlayer.isValid():
    project.addMapLayer(rlayer)
else:
    print('invalid layer')
    print(rlayer.error().summary())
```

If there are issues with the WMS provider, rlayer.error().summary() should point them out.
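For context, the `{z}/{x}/{y}` placeholders in the tile URL stand for the zoom level and the tile column and row. The following sketch uses the commonly used slippy map tile formula to work out which tile covers a given longitude and latitude. The function names are hypothetical and not part of QGIS, which handles the tile requests internally. The sketch is only meant to show what the URL template encodes.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) index of the XYZ tile containing lon/lat at `zoom`."""
    n = 2 ** zoom  # number of tiles per axis at this zoom level
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp to the valid index range so that edge coordinates stay on the grid.
    x = min(max(x, 0), n - 1)
    y = min(max(y, 0), n - 1)
    return x, y


def tile_url(template, zoom, x, y):
    """Fill the {z}/{x}/{y} placeholders of an XYZ URL template."""
    return template.format(z=zoom, x=x, y=y)


template = "https://tile.openstreetmap.org/{z}/{x}/{y}.png"
x, y = lonlat_to_tile(0.0, 0.0, 1)
print(tile_url(template, 1, x, y))
```

The `zmin` and `zmax` parameters in the layer definition limit which zoom levels are requested from the tile server.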

With both the vector layer and the basemap ready, we can finally plot the map:

```python
canvas.setExtent(rlayer.extent())
plot_layers([vlayer, rlayer])
```
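Using the basemap's own extent should give a map that is zoomed out as far as the tile layer goes. To zoom to a smaller area, the canvas needs an extent in the map CRS (EPSG:3857) rather than in longitude and latitude. As a rough sketch of what that conversion involves, here is the spherical Web Mercator forward formula in plain Python. The function names and the bounding box are assumptions for illustration and are not taken from the notebook.

```python
import math

EARTH_RADIUS = 6378137.0  # sphere radius in metres used by EPSG:3857


def lonlat_to_web_mercator(lon, lat):
    """Project lon/lat in degrees (EPSG:4326) to x/y in metres (EPSG:3857)."""
    x = EARTH_RADIUS * math.radians(lon)
    y = EARTH_RADIUS * math.log(math.tan(math.pi / 4.0 + math.radians(lat) / 2.0))
    return x, y


def bbox_to_web_mercator(west, south, east, north):
    """Convert a lon/lat bounding box to (xmin, ymin, xmax, ymax) in metres."""
    xmin, ymin = lonlat_to_web_mercator(west, south)
    xmax, ymax = lonlat_to_web_mercator(east, north)
    return xmin, ymin, xmax, ymax


# Arbitrary example bounding box (assumption, not from the original notebook).
print(bbox_to_web_mercator(16.18, 48.12, 16.58, 48.32))
```

In the notebook, the four values could then go into a `QgsRectangle` that is passed to `canvas.setExtent()`. For anything beyond this simple case, QGIS's own `QgsCoordinateTransform` is the more general tool.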

Of course, we can get more creative and style our vector layers:

```python
vlayer.renderer().symbol().setColor(QColor("yellow"))
vlayer.renderer().symbol().symbolLayer(0).setShape(QgsSimpleMarkerSymbolLayerBase.Star)
vlayer.renderer().symbol().symbolLayer(0).setSize(10)
plot_layers([vlayer, rlayer])
```

And to switch to other basemaps, we just need to update the URL accordingly, for example, to load Carto tiles instead:

```python
urlWithParams = 'type=xyz&url=http://basemaps.cartocdn.com/dark_all/{z}/{x}/{y}.png&zmax=19&zmin=0&crs=EPSG3857'
rlayer2 = QgsRasterLayer(urlWithParams, 'Carto', 'wms')
print(rlayer2.crs())

if rlayer2.isValid():
    project.addMapLayer(rlayer2)
else:
    print('invalid layer')
    print(rlayer2.error().summary())

plot_layers([vlayer, rlayer2])
```
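Since only the tile URL changes between basemaps, the layer definition string can be assembled by a small helper. This is a minimal sketch: `xyz_uri` and the `BASEMAPS` dictionary are hypothetical names, and the helper assumes that the tile URL contains no `&` or `=` characters, which would otherwise clash with the parameter separators.

```python
def xyz_uri(tile_url, zmin=0, zmax=19, crs="EPSG3857"):
    """Build an XYZ layer definition string in the same form as used above."""
    return f"type=xyz&url={tile_url}&zmax={zmax}&zmin={zmin}&crs={crs}"


# The two tile URLs used in this post.
BASEMAPS = {
    "OpenStreetMap": "https://tile.openstreetmap.org/{z}/{x}/{y}.png",
    "Carto": "http://basemaps.cartocdn.com/dark_all/{z}/{x}/{y}.png",
}

uris = {name: xyz_uri(url) for name, url in BASEMAPS.items()}
for name, uri in uris.items():
    print(name, uri)
```

Each resulting string can be passed to `QgsRasterLayer(uri, name, 'wms')` in the same way as `urlWithParams` above.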

You can find the whole notebook at: https://github.com/anitagraser/QGIS-resources/blob/master/qgis3/notebooks/basemaps.ipynb