It’s my pleasure to share with you that Trajectools 2.0 just landed in the official QGIS Plugin Repository.

This is the first version without the “experimental” flag. If you look at the plugin release history, you will see that the previous release was from 2020. That’s quite a while ago and a lot has happened since, including the development of MovingPandas.

Let’s have a look what’s new!

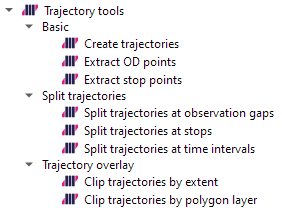

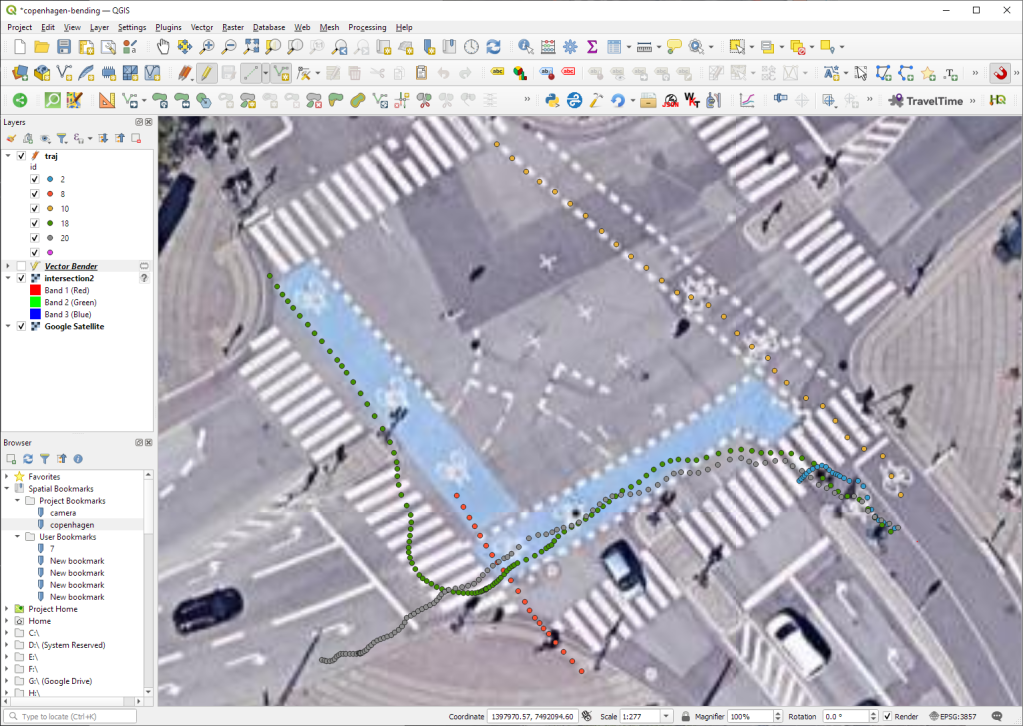

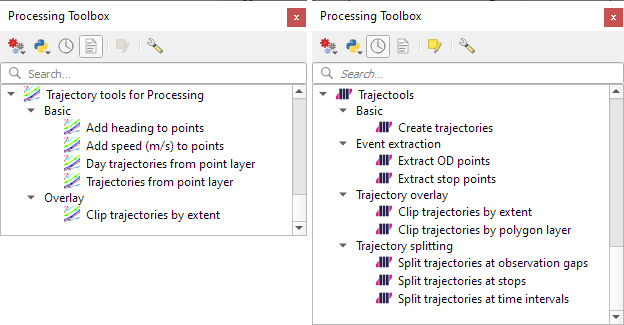

The old “Trajectories from point layer”, “Add heading to points”, and “Add speed (m/s) to points” algorithms have been superseded by the new “Create trajectories” algorithm which automatically computes speeds and headings when creating the trajectory outputs.

“Day trajectories from point layer” is covered by the new “Split trajectories at time intervals” which supports splitting by hour, day, month, and year.

“Clip trajectories by extent” still exists but, additionally, we can now also “Clip trajectories by polygon layer”

There are two new event extraction algorithms to “Extract OD points” and “Extract OD points”, as well as the related “Split trajectories at stops”. Additionally, we can also “Split trajectories at observation gaps”.

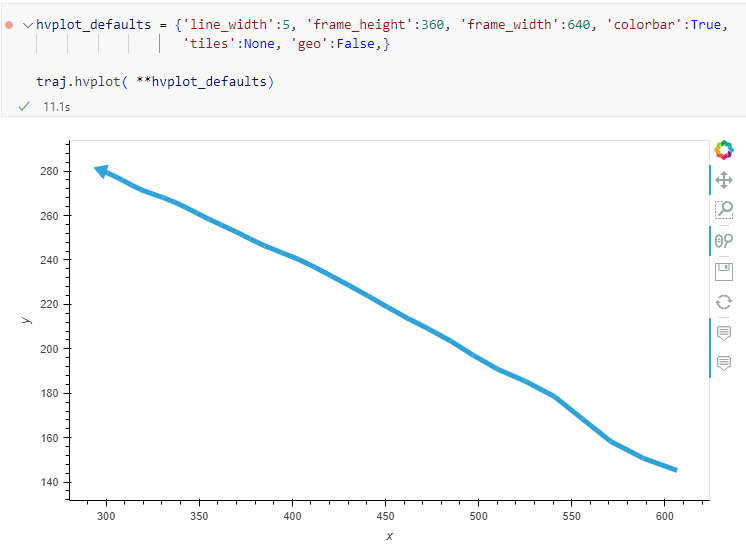

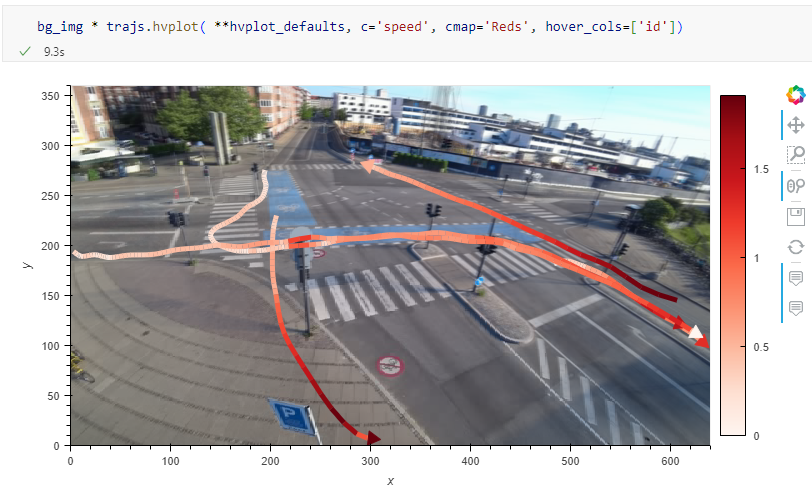

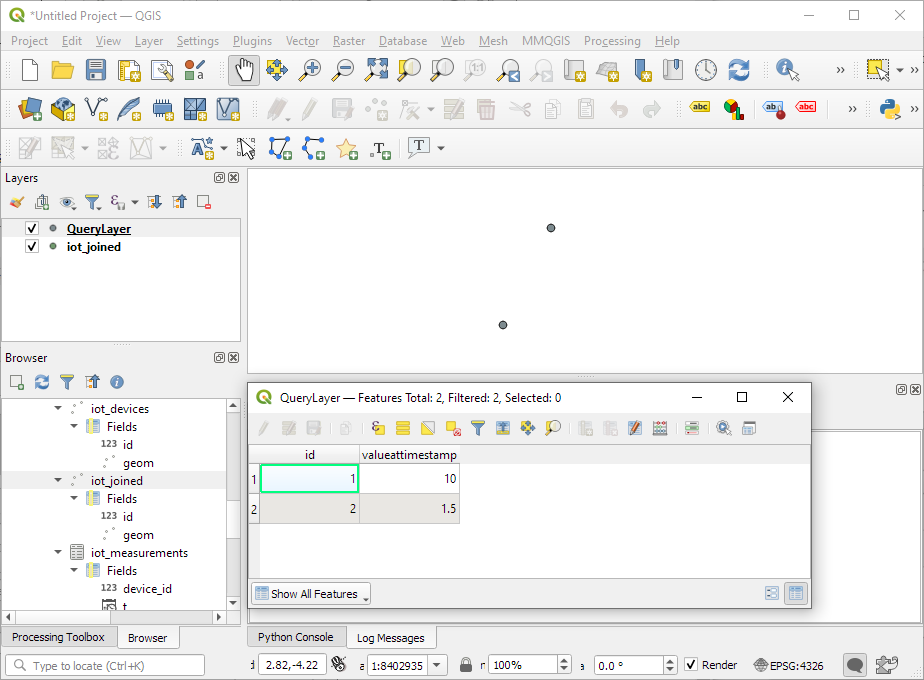

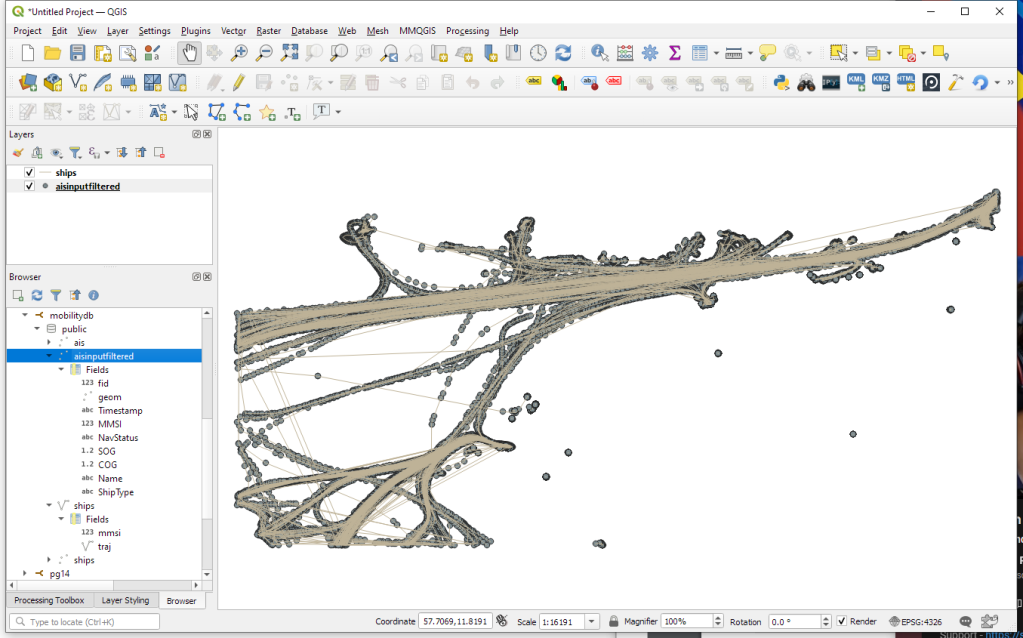

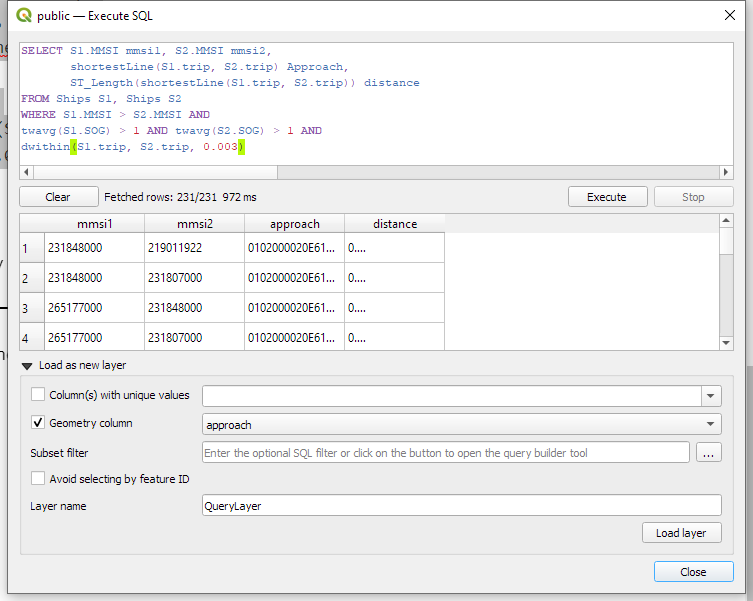

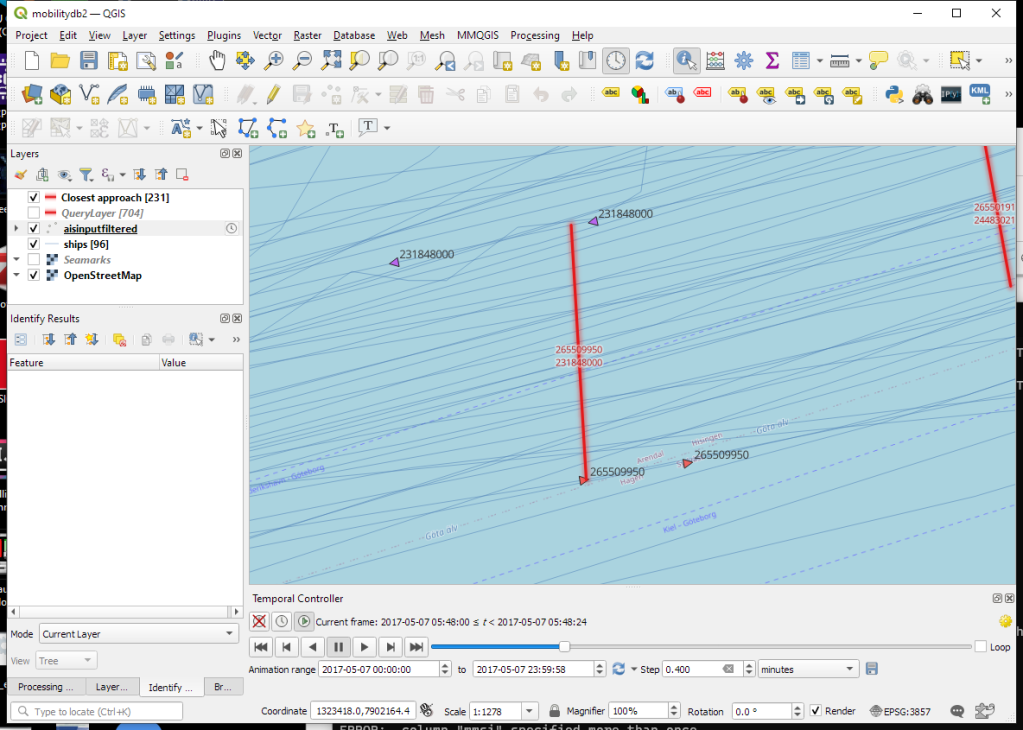

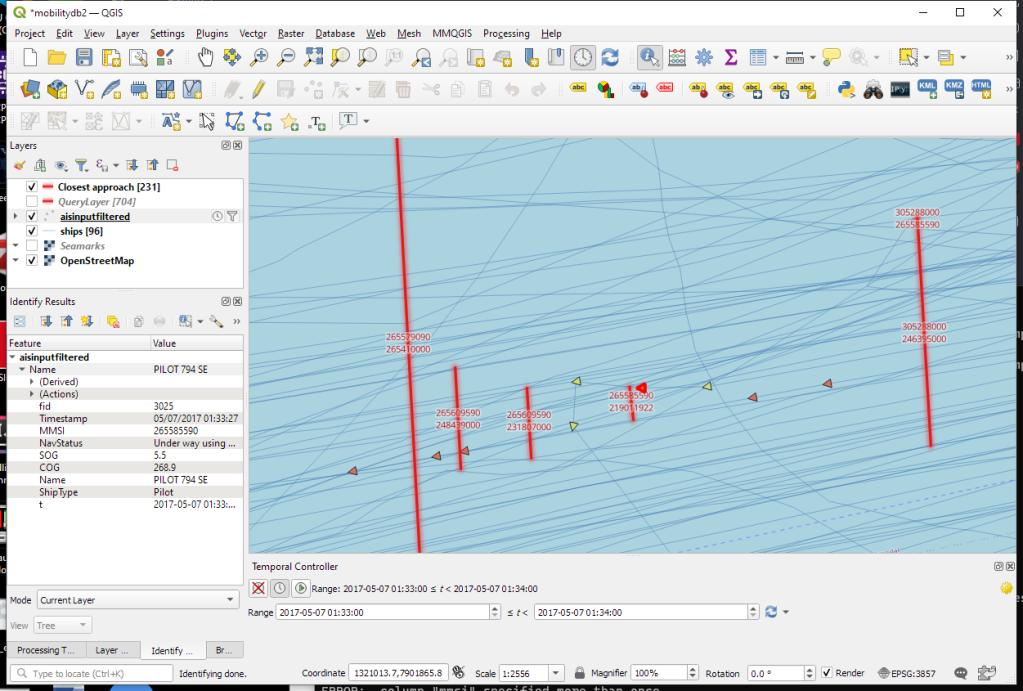

Trajectory outputs, by default, come as a pair of a point layer and a line layer. Depending on your use case, you can use both or pick just one of them. By default, the line layer is styled with a gradient line that makes it easy to see the movement direction:

while the default point layer style shows the movement speed:

How to use Trajectools

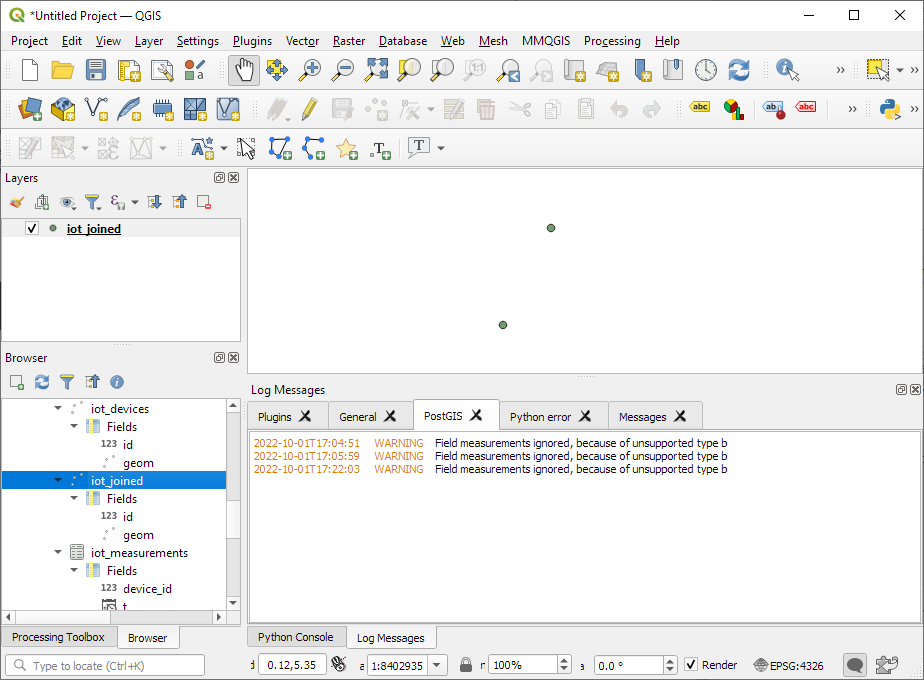

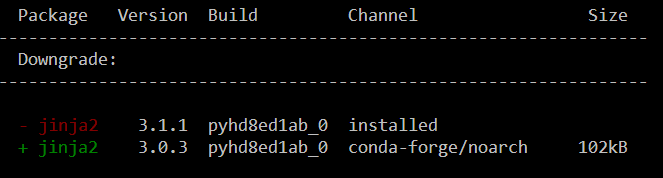

Trajectools 2.0 is powered by MovingPandas. You will need to install MovingPandas in your QGIS Python environment. I recommend installing both QGIS and MovingPandas from conda-forge:

(base) conda create -n qgis -c conda-forge python=3.9

(base) conda activate qgis

(qgis) mamba install -c conda-forge qgis movingpandas

The plugin download includes small trajectory sample datasets so you can get started immediately.

Outlook

There is still some work to do to reach feature parity with MovingPandas. Stay tuned for more trajectory algorithms, including but not limited to down-sampling, smoothing, and outlier cleaning.

I’m also reviewing other existing QGIS plugins to see how they can complement each other. If you know a plugin I should look into, please leave a note in the comments.